2026: A Tale of Two Races

Two tech and AI races will define 2026, each involving, or rather gnawing at, the most hallowed aspects of one of the two most talked-about US giants.

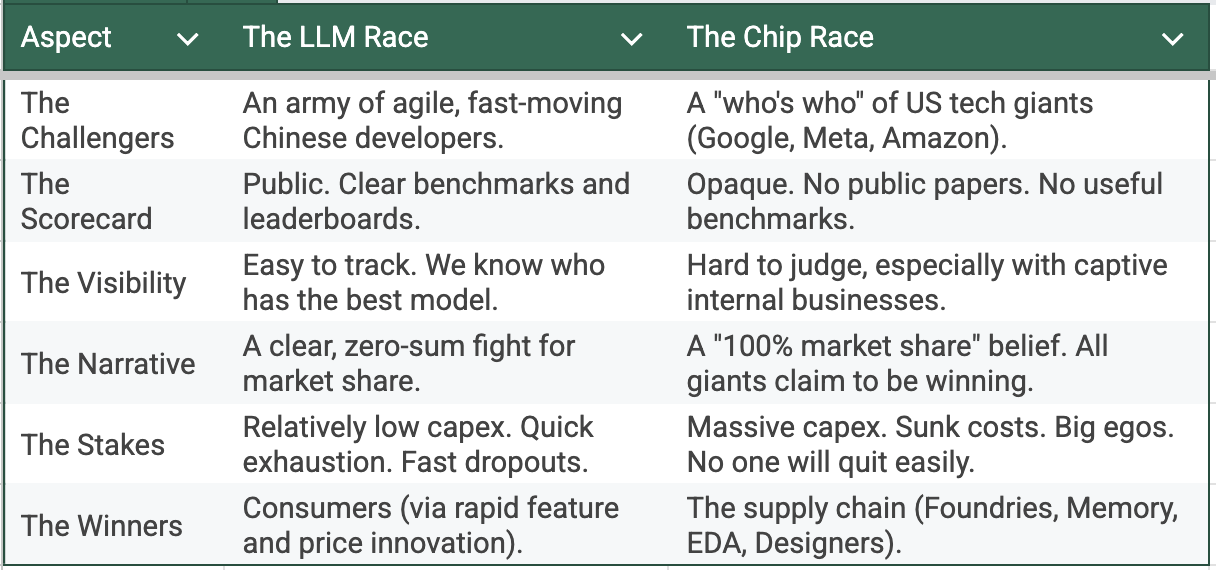

We discussed the first race, in large language model (LLM) development, last week. When 2025 began, it was a domain defined by OpenAI and its peers. By now, the established model makers are no longer competing only with each other; they face intense pressure from an army of Chinese developers. 2026 promises a rising wave of innovation from all sides, with no guarantee about who will emerge genuinely, profitably, and durably ahead in market share. The only certainty about 2026 is that this race shows no sign of peaking.

The second race is far bigger, and far more important for investors. This is the race for custom chips. A whole host of tech giants is trying to nibble at NVIDIA's territory. Custom silicon has become a status requirement: to be a "tech giant" today, you must be building your own. On the surface, the same companies have been in the custom chip business for years. But the pace of progress has shifted sharply in recent weeks.

This piece covers several recent news items that have received little attention in the journals and analyses we have read. What makes the news and what gets ignored offer fascinating lessons. Consider the headlines from the first week of November 2025. On the same day that Tesla shareholders approved a potential $1 trillion package for Elon Musk, a different headline emerged from Korea. Samsung Electronics announced a bonus of 515 million won, or approximately US$354,000, for its 30-member High-Bandwidth Memory (HBM) team. The contrast in scale was amusing. But the real story lay in what was unusual: Samsung rarely grants stock bonuses to individual teams. That it did so for its HBM engineers is telling. We definitely need to write about stock compensation in Korea someday.

But we digress. The important point is that many quiet developments fail to register the way they should. The rise of Chinese LLMs, now that it is being noticed, has a near-zero chance of being ignored, especially when it feeds every kind of pessimist: political, economic, market, or tech. Each will find in it fresh proof of old conclusions. But while the LLM contest grabs the spotlight, the silicon contest may decide the scoreboard.

Custom chip players are all giants. Following each one's progress individually, through its press conferences or analyst reports, yields one-sided stories that cannot all be right. In fact, the last of the seemingly random "sayings" in our indulgent four-sayings piece drew its fodder from these giants' claims of a race in which no one expects anyone to lose. 2026 will make clear who's running and who's running out of time.

Google's New Playbook: No Longer Just Good Enough

Google has been building custom chips for years. Its Tensor Processing Units, or TPUs, have always been an interesting sideshow, almost exclusively for in-house use. The playbook was simple: TPUs were optimized for Google's own workloads. They offered a better Total Cost of Ownership (TCO) for Google's specific needs. They were not a direct threat to the king. They were a budget-control measure, not a declaration of war.

That script just got torched.

Last Friday, the popular media were focused on events around Tesla's pay package. The more technology-inclined had their pens sharpened to discuss the implications of Kimi K2. Maybe we are dramatizing, but that day Google did not just launch another TPU; it declared war. The announcement of "Ironwood," the TPU v7, was not the usual TCO talk. This was a head-to-head challenge. Google stopped comparing its new chip with NVIDIA's last chip and put Ironwood's specs directly against NVIDIA's current flagship, the Blackwell B200, which is barely shipping.

Table: Google TPU v7 (Ironwood) vs. NVIDIA Blackwell B200


The real club is the system. Google's architecture connects over 9,000 chips into a single, massive pod. NVIDIA's current cluster design links 576. They are playing different games at the system level. But what makes this unprecedented isn't on the spec sheet. It's the rhetoric. Google claims Ironwood pods "by far exceed" NVIDIA's best platform. The company secured Anthropic's commitment to deploy up to one million TPU v7 chips. That's not an internal science project. That's an external validation, well-planned and material.

For context, every previous TPU generation played catch-up. TPU v5 chased the A100. TPU v6 was aimed at H100 territory but launched after the H100 was already established. Ironwood launches alongside Blackwell, claiming leadership. The shift from "alternative" to "superior" matters because it changes customer psychology. If Google, hardly known for humility in cloud marketing, believes it can win head-to-head comparisons, others will pay attention.

The usual caveats apply. Ironwood remains captive to Google Cloud. CUDA's ecosystem makes NVIDIA the default choice for anyone not building at Google's scale. Software flexibility still favors the incumbent by miles. But something shifted in late 2025. The performance gap that justified NVIDIA's premium has just narrowed to a sliver.

And this is before we discuss what everyone else has been building.

From the Chipmakers’ Whisper Network

If you want to see the real custom chip war, do not look at the generals. Look at the plumbers. The names that matter are not always on the keynote stage. They are the enablers. The "picks and shovels" companies. Broadcom. Marvell. Alchip. They do not make chips for themselves. They build the escape routes for everyone who wants to buy less from NVIDIA. And business is booming.

Broadcom is the kingmaker. In September 2025, it raised its fiscal 2026 AI revenue forecast to $28–30 billion. This was not a small tweak. It was a massive uplift from a $20 billion guide just three months earlier, an increase of roughly 40 to 50 percent, driven by business from Google’s latest TPU, Meta’s MTIA-3 inference chip, and a staggering $10+ billion order from OpenAI for its new custom accelerator.

Staying with those who have been at it for a while, material progress is visible beyond Google, too. Amazon's Trainium 2 was reportedly "underperforming" NVIDIA's H100 in mid-2025. Yet by November, it was a "multibillion-dollar" business growing 150% quarter over quarter on the back of a massive contract with Anthropic. Microsoft plays the smartest hand: it is NVIDIA's number-one partner in public while secretly powering its own Copilots with its "Athena" chip. Meta’s MTIA reportedly handles over 30% of the massive inference load from Facebook and Instagram.

The New Insurgents: Now with Everyone

The hyperscalers' moves are logical. The new wave of insurgents is what’s truly disruptive. The most discussed is OpenAI, with numbers in the tens of billions. Then there is the flank attack. Qualcomm, which failed at data center chips a decade ago, returned in recent weeks and immediately landed a 200-megawatt deal with Saudi Arabia's Humain for its unreleased AI200 and AI250 chips. Then, of course, there is Elon Musk. Following his Tesla Dojo playbook, xAI is reportedly pursuing custom chips to power Grok.

Although this piece centers on US names, there are substantial new plans, albeit at less advanced technology levels, from multiple Chinese giants, including Huawei, Xiaomi, Alibaba, Baidu, and others.


The Price of Power

“Strong Grace demand.” That was the headline from Jensen Huang’s TSMC appearance this month. Whenever a CEO mentions demand for a product that no one can buy, it’s worth reading between the lines.

Something else was making news the same week: SK Hynix secured a hefty HBM4 price increase. After tense negotiations, reports say NVIDIA accepted terms it had resisted for months. The details are murky: maybe 5–10 percent for existing supply, maybe 50 percent for the new HBM4 generation. Either way, it’s clear who blinked.

The balance of power in memory is shifting. For years, NVIDIA dictated prices as the monopsonist buyer. Now, Google, Amazon, and Microsoft sign their own multi-billion-dollar HBM contracts directly with SK Hynix. When the biggest customers buy direct, the supplier finds its voice. The once-timid vendors are getting bold.

TSMC is no different. It raised advanced-node prices again this year, confident that AI demand is inelastic. Capacity through 2026 is sold out. NVIDIA is still the largest customer, but its share of CoWoS packaging has slipped from 70 percent to roughly 55 percent. The fawning has reversed. TSMC and Hynix know they hold the bottlenecks.

This is why we see NVIDIA pushing for exclusive deals with OpenAI, Tesla, and others: single-supplier, no-outsiders contracts. They are about securing guaranteed supply, not just guaranteed sales. The lesson from 2025 is that scarcity has become negotiable, and NVIDIA is no longer the one setting the price.

The beneficiaries are ecosystem players such as Broadcom and the EDA vendors. They ride the same wave without the same exposure: smaller scale, but cleaner margins. When everyone else is fighting for capacity, those who sell the tools are the only ones sleeping well.

The Courtship Reversal

The balance of power in silicon has inverted, and so have the travel directions of senior managers and deal-makers. Jensen Huang’s trips to Korea and Taiwan are no longer ceremonial. Elon Musk is unusually vocal and repetitive in his admiration for Samsung’s foundries. Everyone, with OpenAI the other most notable example, is inking new long-term deals, even as foundries and memory makers raise prices several times faster than the much-discussed increases in utility prices. These chip price increases are sure to hit all technology products in the years to come.

The upstream majors are breathless about their fully booked capacity. They have also stopped hesitating to ask for price increases that would have been unthinkable even at the peaks of earlier cycles. Even after a nearly 40% revenue increase, TSMC began the quarter with another double-digit price increase for its most advanced nodes.

The numbers are so eye-popping for the memory makers that the first forecasts of Hynix's profits surpassing TSMC's within a few quarters have already surfaced. The list of beneficiaries is longer still: server makers, packagers, and other enablers like Broadcom and the EDA vendors, albeit with smaller benefits of scale.

The War That Matters More to the Market

The chip war matters more than the model war. The LLM race drives headlines. The chip race drives equity values. The first moves minds. The second moves money. It is a fight over capabilities whose true business impact is impossible to compare even after announcements, let alone forecast. The stocks at stake are major holdings in almost everyone’s portfolio. The 2026 Chip War is the real story for global equities.

For now, analysts take every chipmaker’s story as fact. Each claims record share, record growth, record capacity. Not all can gain market share. As 2026 unfolds, it will become clear that global markets are too small for everyone to win. The overestimates in custom chips will matter more than any data center plan or AI forecast.

We are not here to judge who wins. We are here to track evidence. Whatever happens, it is almost a given that none of the giants, having bet their reputations on silicon, will concede defeat in the foreseeable future. The contest will feed on ego and sunk cost. Their war is a gift to others. And while they fight, the quiet suppliers (foundries, memory, and design tools) will keep winning exactly as before.