The recent release of GPT-5 has brought with it a familiar wave of excitement and skepticism. Yet, beyond the usual performance metrics, one claim stood out: a dramatic reduction in hallucinations. This bold assertion pushes a critical topic for investors and financial professionals back into the spotlight. Hallucinations, the confident delivery of incorrect information by AI, are often portrayed as either a fatal flaw or a trivial nuisance. The reality is more complex. Like spam in the Internet era, hallucinations will likely remain a persistent feature of the AI era, not just today but indefinitely. Dealing with them at every level will require a pragmatic, ever-evolving strategy, not a perfect or permanent solution.

This analysis will cut through the noise. The first part will chart the history of, and confusion surrounding, AI hallucinations, showing how we arrived at this moment. The second part will offer a concrete playbook of the mitigation methods we use at GenInnov. We would also love to hear from readers who have found effective methods of their own.

A Problem of Perception and Measurement

Hallucinations have occupied a central role in the AI discourse since early 2023. For pessimists, they are the crutch used to dismiss the technology as fundamentally unreliable. For optimists, they are a frustrating but solvable bug. For regulators, they represent a significant risk to consumers and market integrity. This divergence of opinion is fueled by a simple fact: measuring hallucinations is notoriously difficult.

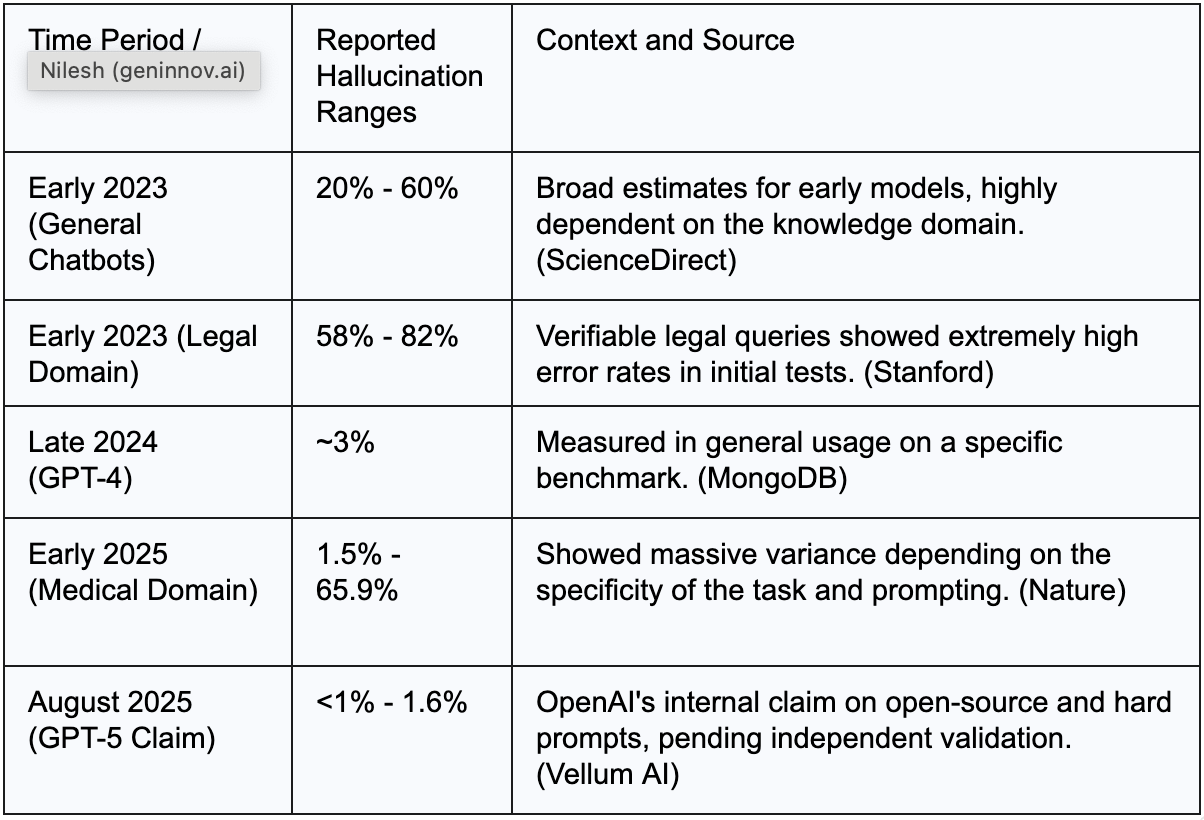

Over the past few years, claimed hallucination rates have swung dramatically, with no coherent pattern. The chaos arises from mismatched models, fragmented benchmarks, and the slippery question of what qualifies as a hallucination at all. Everyone encounters these digital phantoms differently, like personal mirages in a desert storm, which makes measurement notoriously hard. In domains where nuance matters most, the result is that we have no unified, trustworthy gauge. The same technology draws polar-opposite error claims, frequently at the same moment, which renders debates futile and makes progress almost impossible to track. The table below shows the wild range of claims made about hallucination rates since the word took on its new meaning and entered our lexicon just a couple of years ago.

Table 1: The Shifting Sands of Hallucination Metrics (2023-2025)

The Unsteady March of Progress

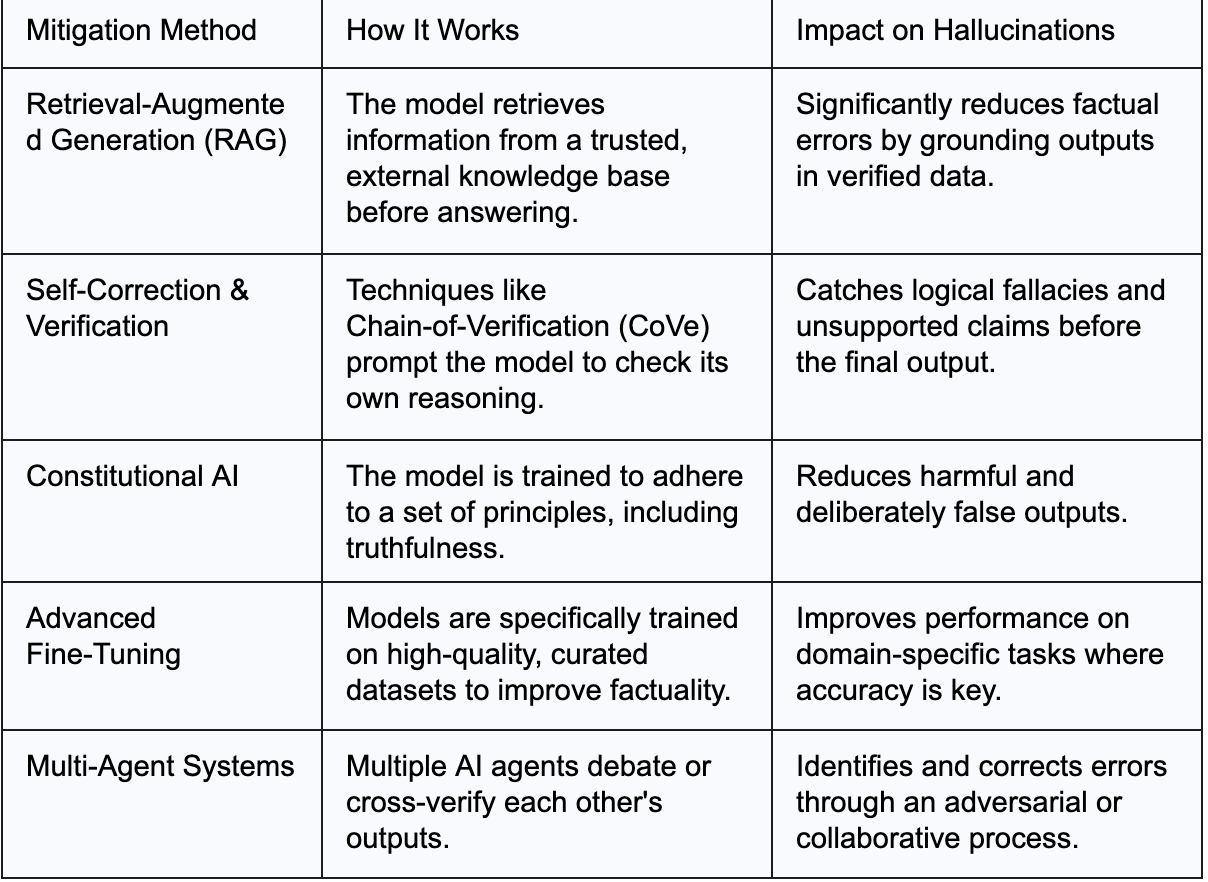

Despite the confusion in measurement, genuine progress in reducing hallucinations is undeniable. This has been driven by a shift from improving models in isolation to building integrated systems that combine multiple defense mechanisms. Developers have moved beyond simply scaling up models and are now focused on a suite of more sophisticated techniques.

These advancements form a layered defense against factual errors. Retrieval-Augmented Generation (RAG) grounds the model in trusted external data, so it does not rely solely on its internal, sometimes flawed, knowledge. Self-correction mechanisms prompt the AI to review its own work, while constitutional principles build in safeguards against generating harmful or untruthful content. This steady evolution of mitigation technology is the primary reason for the objective improvements seen across the industry.

Table 2: Key Technological Advancements in Hallucination Mitigation

The GPT-5 Moment and Its Meaning

This brings us back to GPT-5. OpenAI claims its new model is 45% less prone to hallucinating than its predecessor and boasts a 65% reduction compared to its earlier reasoning models. Whether these precise numbers hold up under independent scrutiny remains to be seen. However, the announcement's real significance has little to do with any single model achieving perfection.
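Both figures are relative reductions, so neither reveals an absolute hallucination rate without knowing the baseline and the benchmark behind it. As a worked illustration, where the baseline rates are purely hypothetical and not taken from OpenAI or any benchmark, a 45% reduction from a predecessor's rate works out as:

$$
h_{\text{new}} = (1 - 0.45)\,h_{\text{prev}} = 0.55\,h_{\text{prev}}
$$

From a hypothetical 20% baseline, the new rate would be 11%, a 9-percentage-point drop; from a hypothetical 2% baseline, the same 45% claim means 1.1%, a drop of under one point. By the same logic, a 65% reduction leaves 0.35 times the earlier reasoning models' rate. Identical headline percentages can therefore describe very different real-world error levels.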

Instead, it signals a shift in the industry's priorities. By making hallucination reduction a headline feature, OpenAI has raised the baseline. Factual reliability is no longer just a background concern; it is a primary competitive metric. This will inevitably force other developers to intensify their own efforts, accelerating the pace of improvement across the board. The era of accepting high hallucination rates as a simple cost of doing business could be ending.

A Pragmatist's Playbook for Mitigation

The responsibility for managing hallucinations does not rest solely with AI developers. Waiting for a perfectly reliable model is not a viable strategy. For users, particularly those in high-stakes fields like finance, proactive mitigation is essential. The quality of an AI's output is intrinsically linked to the quality of its input data and the rigor of the user's verification process. An effective defense requires a disciplined, multi-pronged approach.

Given GenAI’s role in almost everything we do at GenInnov, we work constantly on ways to keep errors from creeping into our work. Everyone on the team is sensitive to the tradeoff between the models’ enormous utility and the cost of their errors. Below is a short list of what has worked for us in curbing the worst hallucinations: carefully selecting appropriate tasks, leveraging multiple models, grounding the AI in proprietary data, and maintaining vigilant human oversight.

First, task selection is critical. Generative AI is poorly suited for precision-critical, multi-step processes where errors compound. In an accounting workflow, for example, a single hallucinated figure in an early step can invalidate the entire financial statement. Knowing which tasks to automate and which to avoid is the first line of defense.
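The compounding problem can be made concrete with simple arithmetic. Below is a minimal Python sketch, assuming each step of a workflow is independently correct with the same probability p. That is a simplification (real errors are rarely independent), and the 98% per-step figure is illustrative only, not a measured hallucination rate.

```python
# Chance that an n-step workflow contains no erroneous step, assuming each
# step is independently correct with the same probability p (a simplification).


def chain_success(p: float, steps: int) -> float:
    """Return the probability that all `steps` independent steps are correct."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return p ** steps


if __name__ == "__main__":
    # Illustrative 98% per-step accuracy; not a measured figure.
    for steps in (1, 5, 10, 20):
        print(f"{steps:>2} steps: {chain_success(0.98, steps):.1%}")
```

Under these assumptions, 98% per-step accuracy falls to roughly 82% over ten steps and about 67% over twenty, which is why long, precision-critical chains are poor candidates for automation.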

Second, a multi-model strategy creates a powerful system of checks and balances. Using one model to generate an initial analysis and a different model to critique it introduces a valuable layer of artificial scrutiny. This adversarial setup can uncover weaknesses, biases, and factual errors that a single model might miss. At times, simply prompting the models to scrutinize the reasoning behind an answer, not just the answer itself, has also yielded good results.
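The generate-then-critique pattern can be sketched in a few lines of Python. This is a hypothetical illustration of the pattern rather than a description of any specific tool: `generator` and `critic` are stand-ins for calls to two different models (any function that maps a prompt to text), and the "reply OK if you find no errors" convention is an assumed prompt design.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function that takes a prompt string and returns text.
# In practice each would wrap a different model provider; none is assumed here.
ModelFn = Callable[[str], str]


@dataclass
class Review:
    draft: str      # the generator's answer
    critique: str   # the critic's assessment of that answer
    flagged: bool   # True when the critic reported at least one problem


def generate_and_critique(question: str, generator: ModelFn, critic: ModelFn) -> Review:
    """Draft an answer with one model, then ask a second model to attack it."""
    draft = generator(f"Answer the question and cite sources where possible.\n\n{question}")
    critique = critic(
        "Review the answer below for factual errors, unsupported figures and flawed "
        "reasoning. Reply with exactly 'OK' if you find none; otherwise list each problem.\n\n"
        f"Question: {question}\nAnswer: {draft}"
    )
    # Anything other than a bare 'OK' (trailing period tolerated) is flagged for follow-up.
    flagged = critique.strip().rstrip(".").upper() != "OK"
    return Review(draft=draft, critique=critique, flagged=flagged)


if __name__ == "__main__":
    # Stub models so the sketch runs offline; replace with real model calls.
    def stub_generator(prompt: str) -> str:
        return "Revenue grew 12% last year."

    def stub_critic(prompt: str) -> str:
        return "The 12% figure is not supported by any cited source."

    review = generate_and_critique("How fast did revenue grow?", stub_generator, stub_critic)
    print(review.flagged)  # True
    print(review.critique)
```

Keeping the critic a different model from the generator is the point of the design: the two are less likely to share the same blind spots. A flagged review still goes to a person for judgment.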

Third, grounding the AI in proprietary data is one of the most effective strategies. Using RAG with a firm’s own internal documents, market reports, and research notes ensures the AI's responses are based on trusted, relevant information. Tools like NotebookLM or agents that access local data transform the AI from a generalist into a domain-specific expert with a vetted knowledge base.
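Grounding can be illustrated with a toy retriever. The Python sketch below is a simplified version of the RAG idea, not how NotebookLM or any particular product works: it ranks a handful of hypothetical internal notes by word overlap with the question (real systems typically use embeddings and a vector index), then builds a prompt that restricts the model to those passages and asks it to admit when the answer is missing.

```python
import re
from collections import Counter

# Minimal retrieval-augmented prompting. Word-overlap scoring is a deliberately
# simple stand-in for the embedding/vector search a production RAG system would use.


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) pairs sharing the most words with the query."""
    query_counts = Counter(tokenize(query))
    scored = []
    for doc_id, text in documents.items():
        doc_counts = Counter(tokenize(text))
        score = sum(min(n, doc_counts[word]) for word, n in query_counts.items())
        if score > 0:  # skip documents with no overlap at all
            scored.append((score, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first, ties by id
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def grounded_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Build a prompt that confines the model to the retrieved passages."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using ONLY the passages below and cite the [id] of each one you use. "
        "If the answer is not in the passages, reply 'Not found in sources.'\n\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical internal notes standing in for a firm's research archive.
    notes = {
        "q2_memo": "Q2 revenue rose on stronger data center demand.",
        "risk_note": "Key risk: customer concentration in two large buyers.",
        "office_policy": "The office closes early on Fridays.",
    }
    hits = retrieve("What drove Q2 revenue?", notes)
    print(grounded_prompt("What drove Q2 revenue?", hits))
```

The instruction to reply "Not found in sources" matters as much as the retrieval: it gives the model an explicit alternative to guessing. The resulting prompt would then be sent to whichever model is in use.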

Depending on the use case, whether preparing legal documents, programming, learning about esoteric fields of innovation, or filtering a large pile of articles for relevance, we continually refine our workflows (which some would call agents) based on what works best. Preserving specific multi-shot instructions is both an efficiency tool and a hallucination-fighting tool.
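One way to preserve multi-shot instructions is to store each task's instructions and worked examples as a fixed, reusable template, so the same vetted guidance accompanies every run. The sketch below is hypothetical: the `FewShotTemplate` class, the relevance-filter task, and its example articles are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical structure for a saved, reusable multi-shot prompt.


@dataclass(frozen=True)
class FewShotTemplate:
    task: str
    instructions: str
    examples: tuple[tuple[str, str], ...] = ()  # (input, expected output) pairs

    def render(self, new_input: str) -> str:
        """Assemble instructions, worked examples, then the new input, in a fixed order."""
        parts = [f"Task: {self.task}", self.instructions]
        for i, (example_in, example_out) in enumerate(self.examples, start=1):
            parts.append(f"Example {i} input:\n{example_in}\nExample {i} output:\n{example_out}")
        parts.append(f"Now the real input:\n{new_input}")
        return "\n\n".join(parts)


# Invented example: filtering a pile of articles for relevance.
RELEVANCE_FILTER = FewShotTemplate(
    task="Article relevance filter",
    instructions=(
        "Label the article RELEVANT or SKIP for semiconductor supply-chain research. "
        "Judge only from the article text; if unsure, answer SKIP and say why."
    ),
    examples=(
        ("A chipmaker announces new fabrication capacity.", "RELEVANT"),
        ("A celebrity chef opens a new restaurant.", "SKIP"),
    ),
)

if __name__ == "__main__":
    print(RELEVANCE_FILTER.render("New export rules tighten chip equipment sales."))
```

Because the template is frozen and rendered identically every time, results from one run are comparable with the next, and any fix to the instructions or examples carries over to all future runs.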

Finally, no automated system can fully replace human oversight. For any output that informs a critical decision, manual fact-checking is non-negotiable. The goal of AI is to augment human expertise, not to circumvent it.
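Automation cannot replace the fact-check, but it can direct a reviewer's attention. A rough Python sketch, assuming the AI's answer and its source documents are available as plain text: it lists figures in the answer that appear verbatim in none of the sources, so a person can check those first. It is a screening aid only; a number written differently ("12 percent" versus "12%") will be flagged, and a wrong number that happens to appear somewhere in the sources will slip through.

```python
import re

# Digits with optional thousands separators or decimals, plus an optional percent sign.
NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")


def unsupported_figures(answer: str, sources: list[str]) -> list[str]:
    """Return figures in `answer` that appear verbatim in none of `sources`, in order."""
    source_figures: set[str] = set()
    for text in sources:
        source_figures.update(NUMBER.findall(text))
    flagged: list[str] = []
    for figure in NUMBER.findall(answer):
        if figure not in source_figures and figure not in flagged:
            flagged.append(figure)
    return flagged


if __name__ == "__main__":
    # Hypothetical answer and sources for illustration.
    answer = "Revenue rose 12% to 4.1 billion in 2024."
    sources = ["Revenue rose 12% year on year.", "FY2024 revenue: 3.9 billion"]
    print(unsupported_figures(answer, sources))  # ['4.1']
```

Anything this returns goes to a human; an empty list means only that no figure was obviously unsupported, not that the output is correct.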

Conclusion: Learning to Live in the Mirage

AI hallucinations are not going away anytime soon. They will continue to be a popular topic of conversation, and news articles mocking AI failures will remain a fixture of our media landscape. This is the nature of a powerful but imperfect technology. In this way, hallucinations are to the AI era what spam and phishing were to the early internet era: a persistent, annoying, and occasionally dangerous flaw that we must learn to manage.

Unlike spam and phishing, hallucinations can at times be a feature rather than a flaw. New-age models can be tasked with exploring areas that have never been mapped. These could be fields of inquiry where no dataset exists and no precedent guides the way. This is the fine line between creativity and fabrication. As we noted in our article "Time Forgot Its Own Shadow," these systems can surface insights no human had yet reached, whether in scientific research or in anticipating risks outside historical patterns. In finance, this can mean scenario analysis that considers events with no prior analogue, probing vulnerabilities that traditional models, bound to past data, simply cannot see. Harnessed correctly, the same mechanism that invents can also discover.

The equally important reality is that progress is constant, and the tools to mitigate the risk are improving rapidly. The focus on factuality is intensifying, and the floor for acceptable performance is rising. For investors and financial professionals, the key takeaway is not to fear the mirage of hallucination, but to learn how to navigate it.